THE FUTURE OF ENTITY DUE DILIGENCE

Introduction

The world has gone through an incredible amount of technological transformation over the past ten years. While it may seem hard to imagine that change will continue at this pace, it’s not only likely to continue, but it will accelerate. There are various functional areas within institutions that support global commerce, but some have been laggards in adopting new technology for a plethora of reasons.

Structural market trends will force organizations to innovate or they will be subject to consolidation, reduction of market share, and, in some circumstances, complete liquidation. Future-proofing the entity due diligence process should be part of an organization's overall innovation road map because of the impact of trends such as rising regulatory expectations, disruptive deregulation initiatives, the emergence of novel risks, the explosion of data, quantifiable successes in artificial intelligence (AI), and changing consumer expectations.

Entity due diligence continues to be a struggle for many financial institutions as regulatory requirements such as beneficial ownership continue to expand in breadth and depth. One of the fundamental struggles to resolve is the identification of the entity with the information used during the due diligence process which can be scattered across various data sources, manual to access and screen, and at times riddled with data quality issues.

The future of entity due diligence is not written in stone and, as will be argued later, there is an opportunity to shape it. That future is not only about the process itself, but also about us, people, and the role we play in it.

This paper will outline some of the key trends that will drive the transformations of the entity due diligence process and what the future could start to look like.

Modeling Possible Futures

To understand the future of entity due diligence, we should attempt to understand, to a certain degree, some of the history which led up to its current state. While it would be impractical to document every historical incident which led up to the current legislative and institutional frameworks, it is useful to imagine entity due diligence as the result of actions and interactions among many distributed components of a complex adaptive system (CAS).

The study of CAS or complexity science emerged out of a scientific movement where the goal was to understand and explain complex phenomena beyond what the traditional and reductionist scientific methods could offer. The movement’s nerve center is the Santa Fe Institute in New Mexico, a trans-disciplinary science and technology think tank, which was founded in 1984 by the late American chemist, George Cowan. Researchers at the institute believe they are building the foundational framework to understand the spontaneous and self-organizing dynamics of the world like never before. The institute’s founder, Mr. Cowan, described the work they are doing as creating, “the sciences of the 21st century.”

CAS are made up of a large number of components, sometimes referred to as agents, that interact and adapt. Agents within these systems adapt in and evolve with a changing environment over time.

CAS are complex by their very nature, meaning they are dynamic networks of interactions, and knowing the behavior of the individual components doesn’t necessarily mean the behavior of the whole can be predicted, or even understood. They are also adaptive, so the individual and collective behavior can self-organize in response to small events or the interaction of many events. Another feature of these systems is that recognizable emergent patterns begin to form, e.g. the formation of cities.

Another key element of CAS is that they are intractable. In other words, we can’t jump into the future because we need to go through the steps. The white line in the image below shows the steps of a system from the past to the present, and the red dendrite-like structures are other possible futures that could have happened if a certain action had been taken at a particular point in time.

Source: YouTube TEDxRotterdam - Igor Nikolic - Complex adaptive systems

These models suggest that no one knows everything, can predict everything, or is in total control of the system. Some entities have greater influence over the evolution of the system as a whole than others, but these models imply that everything can influence the system, even a single person.

The entity due diligence process involves many agents interacting and responding to one another, including, but not limited to, financial institutions, companies, governments, corporate registries, formation agents, challenger firms, criminals, terrorists, and many more. By examining the complexity of the due diligence space, and how technology is constantly reforming agents’ relationships to one another, can firms, and people within those firms, help chart a course for the future of due diligence and their place within it?

The Evolution of Criminals and Terrorists and Reactions from Law Enforcement

The history of the anti-money laundering (AML) regime in the United States could be understood through the lens of a CAS. Before any AML legislation existed, the US government prosecuted criminals such as Al Capone for tax evasion as opposed to other crimes.

In 1970, the US passed the Banks Records and Foreign Transactions Reporting Act, known as the Bank Secrecy Act (BSA), to fight organized crime by requiring banks to do things such as report cash transactions over $10,000 to the Internal Revenue Service (IRS).

Another key development in the history of the AML regime was the prosecution of a New England bank for noncompliance with the BSA. This seemingly small event had far-reaching consequences, as it prompted Congress to pass the Money Laundering Control Act of 1986 (MLCA).

But as we know today, although the US government passed the BSA, criminals and criminal organizations continue to evolve: cash structuring and the use of money mules are common methods of avoiding the reporting requirement.
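The interplay between the reporting threshold and structuring can be illustrated with a minimal sketch. It assumes a hypothetical list of cash deposits and flags customers whose individually unreported deposits add up to more than $10,000 within a short rolling window. The 3-day window, the tuple layout, and the function name are assumptions made for illustration only; they are not taken from the BSA or from any institution's actual monitoring logic.

```python
from collections import defaultdict
from datetime import date, timedelta

REPORTING_THRESHOLD = 10_000  # cash reporting threshold cited in the text (USD)
WINDOW = timedelta(days=3)    # assumed look-back window, illustrative only


def flag_possible_structuring(deposits):
    """Return customer ids whose sub-threshold cash deposits sum to more
    than the threshold within WINDOW.

    `deposits` is an iterable of (customer_id, day, amount) tuples
    (a hypothetical layout chosen for this sketch).
    """
    by_customer = defaultdict(list)
    for customer_id, day, amount in deposits:
        # Deposits over the threshold are reported anyway; structuring
        # is about keeping each deposit at or under it.
        if amount <= REPORTING_THRESHOLD:
            by_customer[customer_id].append((day, amount))

    flagged = set()
    for customer_id, items in by_customer.items():
        items.sort()
        start = 0
        running = 0
        for day, amount in items:
            running += amount
            # Drop deposits that fall outside the rolling window.
            while day - items[start][0] > WINDOW:
                running -= items[start][1]
                start += 1
            if running > REPORTING_THRESHOLD:
                flagged.add(customer_id)
                break
    return flagged
```

In this sketch a customer making three deposits of $4,000, $4,000, and $3,000 on consecutive days would be flagged, while a customer making two $9,000 deposits a month apart would not. A criminal who learns the window length can simply spread deposits further apart, which is the adaptive behavior the paragraph above describes.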

Drug Cartels

The rise of the Colombian and Mexican drug cartels in the 1970s and 80s shows how agents of a CAS act and react to one another. In 1979, Colombian drug traffickers were killed in a shootout in broad daylight at the Dadeland Mall in Miami. This event and many others got the attention of US law enforcement and, in 1982, the South Florida Drug Task Force was formed with personnel from the Drug Enforcement Administration (DEA), Customs, Federal Bureau of Investigation (FBI), Bureau of Alcohol, Tobacco, Firearms and Explosives (ATF), Internal Revenue Service (IRS), Army, and Navy.

By the mid-1980s, as the South Florida Drug Task Force started to succeed in reducing the flow of drugs into the US via South Florida, the Colombian cartels reacted and outsourced much of the transportation of cocaine to the US via Mexico with the help of marijuana smugglers.

Mexican drug cartels continue to evolve and innovate: they have reportedly used unmanned aerial vehicles (UAV), more commonly referred to as drones, to fly narcotics from Mexico over the southwest border to San Diego, and have even experimented with weaponizing drones with an improvised explosive device (IED) equipped with a remote detonator.

9/11 and the Evolving Threat of Terrorism

The above examples pale in comparison to the impacts of the 9/11 terrorist attacks on the United States that killed almost 3,000 people and caused billions of dollars in property damage, economic volatility, cleanup costs, health problems for people living or working near the site, job loss, tax revenue loss, and many other cascading effects. Shortly after the 9/11 terror attacks, Congress passed the Patriot Act which was designed to combat terrorism, including its financing.

However, as the US and other countries have enacted laws to prevent the funding of terrorism, terrorists have reacted or evolved by opting to use legitimate funding sources such as government benefits, legitimate income, and small loans to launch low cost attacks. The French government estimated the November 2015 Paris attacks cost a maximum of 20,000 euros.

Vehicles have been used as ramming weapons in terror attacks in London, Berlin, Nice, Barcelona, and New York, at a cost that simply amounted to fuel and possibly a rental charge. This also points to the changing nature of the people who are engaging in these types of attacks, who usually have a criminal background and are radicalized by content online as opposed to operating within a well-financed and organized cell of a larger terror group.

But is it only the content online that radicalizes people or does most terrorist recruitment happen face-to-face? According to research by Washington University’s Professor Ahmet S. Yahla, Ph.D., just over 10 percent of the 144 people charged with ISIS-related offenses in US courts were “radicalized online.” Professor Yahla ran a program, in a Turkish city on the southern border with Syria, to intervene with school-aged children at risk for recruitment by terrorist organizations. Professor Yahla found that most families weren’t aware that their children were being approached by terrorist recruiters and were open to intervening to ensure their children were not radicalized.

The Professor’s assertion that most terrorist recruitment happens face-to-face seems to make sense because we have all experienced the power of a personal referral. If a person you like and trust makes a recommendation to you, then you are much more likely to act on it than if you were prompted to act through some passive media online. This also implies that networks of people exist, in countries where attacks take place, that believe in various terrorist ideologies, but not all of those believers take up arms.

Naturally, this leads to the idea that even if internet service providers (ISP) and social media companies could remove much of the terrorist propaganda online, it wouldn’t stop so-called ‘lone wolf’ terror attacks, as the system would evolve and rely more on face-to-face recruitment as small communities of people with shared beliefs and values find ways to congregate.

However, this doesn’t mean that laws such as the Patriot Act are ineffective at preventing terrorism. Without a doubt, they are creating barriers against large-scale terror attacks, but terrorism and its agents are constantly evolving. While the Patriot Act and similar laws allow for broader surveillance powers by governments, terrorists know this and have reacted by using encrypted messenger applications such as Telegram to communicate and spread propaganda.

All of this suggests that the fight against terrorism needs to be a multi-pronged approach including, but not limited to, denying terror groups access to the financial markets, intervening early with at-risk youth, tackling various socio-economic issues that could contribute to feelings of alienation among impressionable youth, and countering the compelling social media content delivered by ISIS by launching an ongoing and strategic counter-narrative through public-private partnerships (PPP).

Drivers of Regulation

The motivations for new regulations can come in many different forms such as combating terrorism, drug trafficking, money laundering, tax evasion, securities fraud, financial fraud, acts of foreign corruption, etc. As discussed in the previous section, terrorism continues to evolve, and the growing number of low-cost attacks will keep it at the top of political agendas for years to come.

Data and technology are integral to what is driving new regulations on various fronts. The rise of smartphones, the explosion of data, and the proliferation of the internet of things (IoT) and other sensor technology have fundamentally changed the speed at which data can be accessed, transferred, analyzed, and acted upon. Various regulatory rules examined later in this paper can, in one way or another, be linked to technological transformations.

The most pressing example of how technological transformation can influence regulation is the Panama Papers data leak. While it could be argued that it was simply the action of one person, or a group of people, the leak would only have been possible if the technological framework was already in place to store, distribute, and analyze all of those documents rapidly and truly understand their implications.

Would the Panama Papers data leak even have been possible in 1940?

Atomic Secrets Leak

Maybe not on the scale of the Panama Papers, but data leaks are not something completely new as several Americans and Britons helped the Union of Soviet Socialist Republics (USSR) become a nuclear power faster than it could on its own, by leaking military secrets. Some scientists contend that the USSR and other countries would have obtained a nuclear bomb on their own, but the data leaks likely accelerated the process by 12 to 18 months or more.

Klaus Fuchs is commonly referred to as the most important atomic spy in history. Fuchs was born in Germany and was actively engaged in the politics of the time. Fuchs immigrated to England in 1933 and earned his PhD from the University of Bristol. Eventually, he was transferred to Los Alamos labs in the early 1940s where he began handing over important documents about the nuclear bomb’s design and dimensions to the USSR. There were other spies giving nuclear secrets to the USSR with various motivations, such as communist sympathies or the belief that the more countries that had access to nuclear technology, the lower the probability of nuclear war.

The volume of nuclear secrets leaked to the USSR may not have been massive in terms of storage space, if we imagine all of those documents being scanned as images or PDF files, but the implications of the USSR having that information during World War II were very serious. So, data leaks have not emerged out of nowhere, but the scale and speed at which information can be distributed are very different today than they were 70 years ago. It’s much easier to stick a universal serial bus (USB) drive into a computer than to walk out of an office building with boxes full of files.

The Data Leak Heard Round the World

The Panama Papers had a tsunami effect on global regulations as it prompted countries around the world to re-evaluate their corporate registry requirements and the use of shell companies to hide beneficial ownership. The first news stories about the Panama Papers leak appeared on April 3, 2016, and just over a month later the US Financial Crimes Enforcement Network (FinCEN) issued the long-awaited Customer Due Diligence (CDD) final rule. The magnitude of the Panama Papers is revealed in the sheer volume of documents, entities, and high-profile individuals involved, as shown in the image below.

Source: International Consortium of Investigative Journalists

Data is a significant driver of new regulation, which stems from a wide range of activities including money laundering, corruption, human trafficking, tax evasion, etc. A wide variety of regulatory rules, which institutions across the globe had to prepare for, are coming into full force in 2018, such as the Payment Services Directive (PSD2) in the European Union, the CDD final rule, the New York State Department of Financial Services (NYDFS) risk based banking rule, and others.

The impact of the Paradise Papers is yet to be fully realized, but it's fair to assume that it will contribute to the trend of increased regulation and scrutiny of the financial services industry. Whether or not an immense amount of explicit wrongdoing is identified, the public perception of offshore tax havens and shell companies continues to take on a negative light.

Based on current regulatory expectations for financial institutions and other structural and market trends, such as the digital experience, new and evolving risks, increased competition, technological progress, growth of data, and the need to make better decisions and control costs, the entity due diligence process for the financial services industry will be drastically different than it is today.

The Murder of a Journalist

The Panama Papers are still having cascading effects across the globe and one of the most tragic examples of this in 2017 was the assassination of the Maltese journalist, Daphne Caruana Galizia, by a car bomb. Mrs. Galizia was a harsh critic of Maltese political figures accusing some of corruption and international money laundering much of which was revealed by the Panama Papers. She also highlighted the links between Malta’s online gaming industry and the mafia. The only other journalist killed in the EU during 2017 was Kim Wall who was allegedly killed and dismembered by the Danish inventor, Peter Madsen.

The difference between the murders of the two journalists is that in the case of Mrs. Galizia, there is a strong indication that her reporting on corruption and money laundering could be the underlying motive for her death. However, the death of Mrs. Wall appears to be a more random and unplanned event, as the circumstances that led up to her death are still unclear.

According to the Committee to Protect Journalists (CPJ), 248 journalists who reported on corruption have been killed since 1992, and only 7 of those killings happened in the EU. The top 5 countries for murdered journalists who reported on corruption since 1992 were the Philippines, Brazil, Colombia, Russia, and India, totaling 34, 26, 24, 21, and 17 respectively.

Outside of reporting on corruption, the main drivers of journalist murders in Europe from 1992 to the present were war and terrorism, which makes the case of Mrs. Galizia all the more shocking. The war in Yugoslavia created a high-risk reporting environment for journalists and left 23 of them dead. On January 7, 2015, two brothers marched into the Charlie Hebdo offices and massacred 12 people, 8 of them journalists, which was the worst attack against the Western media since 1992.

Source: http://www.voxeurop.eu/en/2017/freedom-press-5121523

The worst attack against the media worldwide in recent memory occurred in the Philippines in 2009, when gunmen killed 32 journalists and 25 civilians in the Maguindanao province, which is a predominantly Muslim region of Mindanao. Terrorism was the common factor that linked the Charlie Hebdo attack and the Maguindanao massacre. However, there is another common factor that underlies the murder of Mrs. Galizia in Malta, the Charlie Hebdo journalists in France, and the reporters in the Philippines, which is that terrorism and corruption can be linked to shell companies.

This is not to say that any of those specific attacks were explicitly linked to the use of shell companies, but there is a common theme that criminals, corrupt politicians, and terrorists use shell companies as a tool to hide their identities, as exposed by the Panama Papers.

The European Parliament is already taking action: it passed a motion in November 2017 stating that Malta’s police and judiciary “may be compromised.” The murder of this journalist is extremely tragic and it could prompt more aggressive moves from the EU to get its member states up to regulatory standards, especially in the area of beneficial ownership.

It could be argued that the EU is only as strong as its weakest link, so can the EU accept its members exhibiting low standards of justice and law enforcement?

Source: Bureau Van Dijk - A Moody’s Analytics Company

Regulations Coming into Force in 2018

There are three important financial service regulations coming into force in 2018 which are the CDD final rule in the United States, PSD2 in the European Union, and the NYDFS risk based banking rule in New York.

The CDD final rule was on the US legislative radar for some time, but it appears the Panama Papers expedited its approval. While the CDD final rule is a step in the right direction it does place additional burdens on financial institutions without addressing other issues such as specific states in the US still allowing companies to incorporate without collecting and verifying beneficial ownership.

The report titled Global Shell Games: Testing Money Launderers’ and Terrorist Financiers’ Access to Shell Companies stated that “It is easier to obtain an untraceable shell company from incorporation services (though not law firms) in the US than in any other country, save Kenya.” Two bills were introduced in the Senate and the House of Representatives which are intended to address the weak state corporate formation laws; these will be discussed in a later section.

PSD2 is one of the most unusual financial regulations because it is arguably one of the few instances where legislators adopted a proactive, as opposed to reactive, regulatory framework. The motivations for the regulation were to increase market competition, consumer protection, and the standardization of infrastructure in the payments sector by mandating that banks create mechanisms for third party providers (TPP) to access customers’ bank information. What’s interesting about this regulation is that it will have a significant impact on the market, but there wasn’t a discernible negative event which drove its adoption.

Instead, the regulation is forward-looking, as its supporters realized, quite astutely, that incredible amounts of change are on the horizon for the financial services sector due to technological disruptions. Since EU regulators can see the future coming, to a certain degree, they have enacted legislation to help shape the future of payments with sensible regulations which will help increase standardization, security, and consumer protection.

The NYDFS risk based banking rule is also very interesting because it places more accountability on the senior leadership of New York regulated financial institutions, who must certify the institution’s AML program. The rule notes that reviews conducted by the NYDFS have identified many shortcomings in transaction monitoring and filtering programs and a lack of governance, oversight, and accountability.

Customer Due Diligence (CDD) Final Rule

In the US, the long awaited CDD final rule adds the fifth pillar to an effective AML program. In reality, many financial institutions have already integrated CDD into their overall AML program. The other four pillars of an effective AML framework, which are built upon an AML risk assessment, are: internal controls, independent testing, responsible individual(s), and training personnel.

Source: Data Derivatives

For example, in terms of transaction monitoring some banks would use the risk rating of a customer as another way to prioritize their alert queues. In other words, a customer which is perceived by the institution as being higher-risk would have their activity investigated first when compared to similar activity of a lower-risk client.
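The prioritization described above can be sketched in a few lines. This is a minimal illustration, assuming a hypothetical `Alert` record and a three-level risk scale; the field names, the scale, and the choice to break ties by transaction amount are assumptions for this sketch rather than a description of any bank's actual alert queue.

```python
from dataclasses import dataclass

# Assumed ordinal ranks for customer risk ratings (lower rank = reviewed first).
# Real institutions define their own scales.
RISK_RANK = {"high": 0, "medium": 1, "low": 2}


@dataclass
class Alert:
    alert_id: str
    customer_risk: str  # "high", "medium" or "low" (hypothetical scale)
    amount: float       # value of the alerted activity


def prioritize(alerts):
    """Order alerts so higher-risk customers are investigated first.

    Ties within the same risk rating are broken by larger amount first,
    which is an illustrative choice, not a regulatory requirement.
    """
    return sorted(alerts, key=lambda a: (RISK_RANK[a.customer_risk], -a.amount))
```

With this ordering, similar activity from a high-risk and a low-risk customer ends up at opposite ends of the queue, which is the behavior the paragraph describes.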

One of the major impacts to financial institutions in 2017 was the preparation to comply with the new beneficial owner requirements for the impending May 11, 2018 deadline. There were definitely challenges to comply with this new rule from an operational perspective, especially at large banks, because of the siloed structure of client onboarding and account opening processes. Banks can have dozens of onboarding and account opening systems and trying to coordinate where the know your customer (KYC) process is for a particular customer, at a given point in time, and when the handoff to the next system happens, can be extremely challenging.

Also, the account opener certification created wrinkles in the operations process, and questions arose regarding how long a certification was active and if one certification could support multiple account openings.

Also, the CDD final rule is based on trusting the customer. However, as discussed in a recent article in the Journal of Financial Compliance (Volume 1 Number 2) by Anders Rodenberg, the Head of Financial Institutions & Advisory in North America for Bureau van Dijk - A Moody's Analytics Company, there are three inherent flaws in asking companies to self-submit beneficial ownership information:

lack of knowledge;

missing authority; and

no line of communication when changes happen.

While US banks would be in compliance with the rule if they simply collected beneficial ownership based on what their customers supplied, that information may not be accurate all of the time. This is why it would be prudent for a financial institution to collect beneficial ownership from the client, and also use a third party data source such as Bureau Van Dijk’s Orbis as another method of coverage and verification.

If there were a gap between what the customer provided and what Orbis has, then it could be a factor to consider when determining which customers should undergo enhanced due diligence (EDD). Also, the more beneficial owners that are identified, either disclosed or uncovered, the greater the opportunity to screen more individuals through adverse media, politically exposed persons (PEP), and sanctions lists. This allows for greater confidence in an institution’s risk-based approach given the broad coverage.
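The comparison between customer-supplied and third-party beneficial ownership can be sketched as a simple set difference. This is a minimal illustration that takes two plain lists of owner names as input; it does not call any real data provider's API, and the function names, the crude name normalization, and the output keys are all assumptions made for this sketch. Production name matching would need to handle transliteration, aliases, and identifiers.

```python
def normalize(name):
    """Crude name normalization: lowercase and collapse whitespace."""
    return " ".join(name.lower().split())


def ownership_gap(disclosed, third_party):
    """Compare owners disclosed by the customer with owners from a
    third-party source (both given as lists of names).

    Returns a dict with hypothetical keys:
      undisclosed - found by the third party but not supplied by the customer
      unconfirmed - supplied by the customer but absent from the third party
      to_screen   - union of both, i.e. everyone to run through adverse
                    media, PEP, and sanctions screening
      edd_factor  - True if any gap exists (one input to an EDD decision)
    """
    disclosed_set = {normalize(n) for n in disclosed}
    third_party_set = {normalize(n) for n in third_party}
    undisclosed = third_party_set - disclosed_set
    unconfirmed = disclosed_set - third_party_set
    return {
        "undisclosed": sorted(undisclosed),
        "unconfirmed": sorted(unconfirmed),
        "to_screen": sorted(disclosed_set | third_party_set),
        "edd_factor": bool(undisclosed or unconfirmed),
    }
```

The `edd_factor` flag is deliberately only one input: a gap may reflect stale data on either side, so it is better treated as a reason to look closer than as a conclusion.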

Finally, financial institutions in the US and elsewhere should be monitoring global standards and consider that the CDD Final Rule or similar regulations could be amended from the trust doctrine to the trust and verify concept as detailed under the Fourth Anti Money Laundering Directive (AML4).

Sanctuary Corporate Formation States

Two bills aimed at addressing beneficial ownership gaps in the US were introduced in the Senate and the House of Representatives: the True Incorporation Transparency for Law Enforcement (TITLE) Act (S. 1454) and the Corporate Transparency Act (H.R. 3089/S. 1717). Both proposals cite similar findings of how criminals can exploit weaknesses in state formation laws. The TITLE Act specifically mentions the Russian arms dealer, Victor Bout, who used at least 12 companies in the US to, among other things, sell weapons to terrorist organizations trying to kill US citizens and government employees. Additionally, the TITLE Act refers to other major national security concerns such as Iran using a New York shell company to purchase a high-rise building in Manhattan and transferring millions of dollars back to Iran, an Office of Foreign Assets Control (OFAC) sanctioned country, until authorities found out and seized the building.

Both bills have reasonably good definitions of beneficial ownership and the requirements to collect identifiable information. Also, both bills make up to $40 million available for the costs of implementing the new rules, funded by asset forfeiture funds accumulated from criminal prosecutions. There are common sense proposals in both bills such as exemptions for publicly traded companies and for companies with a physical presence, a minimum number of employees, and minimum annual revenue.

The one distinction between the two bills is that the TITLE Act requires states to comply with the bill, but it doesn’t penalize states if they fail to comply. If history is any guide, then the TITLE Act could lead to a scenario similar to sanctuary cities, where local city governments fail to cooperate with federal immigration authorities.

If the TITLE Act were adopted, then how do we know that states such as Delaware will comply? Could the US end up with ‘sanctuary corporate formation states’?

The Corporate Transparency Act takes a slightly different approach where the states are not required to collect beneficial ownership, but if states are in noncompliance with the statute then companies incorporating in those states are required to file their beneficial ownership information with FinCEN. While it could be argued this bill is slightly better than the TITLE Act, it’s not clear how FinCEN will monitor and enforce companies out of compliance with this regulation.

The ideal scenario for the US would be that all states collect beneficial ownership in the same standardized fashion. At the other extreme, if some states fail to comply, the federal government could take over the authority to form corporations and remove that right from the states. The latter scenario would not be ideal and would be very unlikely to be passed by Congress unless there were some extreme circumstances, possibly equivalent to another 9/11 terrorist attack tied to the use of shell companies in the US. There would be significant economic and social implications of such a change, so it appears that beneficial ownership doesn’t have enough political capital to initiate such a drastic move.

It also gets into the nuts and bolts of legal theory. Would the Supremacy Clause of the US Constitution hold up or would states try to nullify federal law, arguing that it was unconstitutional?

Payment Services Directive (PSD2)

On October 8, 2015 the European Parliament adopted the European Commission’s updated PSD2. The regulation is ushering in a new era of banking regulations which is positioned to increase consumer protection and security through innovation in the payments space. The idea is that consumers are the rightful owners of their data, both individuals and businesses, and not the banks. The regulation mandated that banks provide payment services to TPP via an application programming interface (API) by January 13, 2018.

Essentially, PSD2 will allow TPP to create customer-centric and seamless interfaces on top of banks’ operational infrastructure. On a daily basis individual and business customers interact with various social and messaging platforms such as Facebook, LinkedIn, WhatsApp, Skype, etc. This regulation will allow a whole array of TPP to enter the payments space, accessing the banking data of their loyal customer base and even initiating payments on their behalf, assuming the consumer has already given authorization to do so.

Consumers are demanding real-time payments as many other parts of the digital experience are real time, so why should it be any different for payments? Financial institutions have made some progress in the payments space, as evidenced by the successful launch and market penetration of Zelle, backed by over 30 US banks. Zelle allows customers of participating banks to send money to another US customer, usually within minutes, with an email address or phone number.

The integration is rather seamless because Zelle can be accessed through the customer’s existing mobile banking application, so a separate Zelle application doesn’t have to be downloaded, although Zelle is now also being offered to customers of non-participating banks. It appears that the launch of Zelle, which required extensive collaboration among many leading US banks, was prompted by the risk that challenger payment providers such as Venmo and Square Cash posed to the industry.

The European Parliament astutely realized that to encourage innovation and competition in the payments space, new rules would need to be enacted. The fact that PSD2 was initiated by the government actually strengthens cybersecurity as opposed to diluting it. The reason is that whether we like it or not, innovation will march on and new FinTech players will continue to emerge. Since the European Parliament is taking a proactive role in the evolution of the payments space, it allows the industry as a whole to think about best practices for security and authentication.

PSD2 requires strong customer authentication (SCA), which is based on elements from three basic categories:

Knowledge (e.g. something only the user knows)

Possession (e.g. something only the user possesses)

Inherence (e.g. something the user is)
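Under PSD2, strong customer authentication means authenticating with two or more elements drawn from different categories in the list above. A minimal sketch of that check follows. The factor names and their mapping to categories are hypothetical and chosen only for illustration; the sketch also ignores the independence and dynamic-linking requirements that a real implementation would have to address.

```python
from enum import Enum


class Category(Enum):
    KNOWLEDGE = "knowledge"    # something only the user knows
    POSSESSION = "possession"  # something only the user possesses
    INHERENCE = "inherence"    # something the user is


# Hypothetical mapping of authentication factors to SCA categories.
FACTOR_CATEGORY = {
    "password": Category.KNOWLEDGE,
    "pin": Category.KNOWLEDGE,
    "hardware_token": Category.POSSESSION,
    "registered_phone": Category.POSSESSION,
    "fingerprint": Category.INHERENCE,
    "face_scan": Category.INHERENCE,
}


def satisfies_sca(verified_factors):
    """Return True if the verified factors span at least two distinct
    categories. Unknown factor names are ignored."""
    categories = {
        FACTOR_CATEGORY[f] for f in verified_factors if f in FACTOR_CATEGORY
    }
    return len(categories) >= 2
```

For example, a password plus a face scan would pass this check, while a password plus a PIN would not, because both belong to the knowledge category.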

The third category, inherence, opens up one of the most promising applications of artificial intelligence: biometric authentication and, more specifically, facial recognition. This will apply to both individual customers and entities because financial institutions may start to store biometric data of executives who are authorized to perform specific transactions. Biometrics and how they could impact entity due diligence will be discussed later in this paper.

New York State Department of Financial Services (NYDFS) Risk Based Banking Rule

The NYDFS risk based banking rule requires covered institutions to certify they are in compliance with the regulation by April 15, 2018. The rule is the first of its kind and identifies the key components of effective transaction monitoring and filtering programs. As mentioned earlier, this rule has highlighted the need for senior management to have greater governance, oversight, and accountability over the management of the transaction monitoring and sanctions screening programs of their institution.

Some of the requirements are straightforward and well known in the industry, such as conducting a risk assessment and ensuring a robust KYC program is in place. However, this rule is unique in the sense that it highlights very specific system, data, and validation requirements. Transaction and data mapping were highlighted as key activities which are specific to the implementation of transaction monitoring and sanctions screening systems.

The focus on data and sound quantitative methods is not new from a regulatory perspective, because many of the requirements in the NYDFS rule can be found in the Office of the Comptroller of the Currency’s (OCC) paper, Supervisory Guidance on Model Risk Management. The OCC’s paper has traditionally applied to market, credit, and operational risks, such as ensuring the financial institution is managing its risk properly so that it has enough capital to satisfy reserve requirements and has conducted sufficient stress testing to show that different market scenarios can be endured.

Many of the high dollar enforcement actions by the NYDFS have involved foreign banks and specifically cite the AML risk of correspondent banking. There have been several phases to the enforcement actions, in which some banks actually engaged in systemic wire-stripping, the deleting or changing of payment instructions, to evade US sanctions. As large financial institutions have moved away from these practices, the enforcement actions have cited deficiencies in other aspects of the institution’s AML program.

This brings us back to a common theme of CAS. Anyone who has worked with many aspects of an institution’s AML program knows that it’s complex. It's not only the systems which are supposed to screen for sanctions and monitor suspicious activity, because there are other factors at play such as the interactions between interdepartmental staff, vendor management, emerging financial crime trends, new regulatory rules, staff attrition, siloed information technology (IT) systems, and general technology trends, among other things.

In a sense, one interpretation of what the NYDFS rule says, at its essence, is that institutions need to do a better job at managing complexity. And to manage complexity effectively, in complex organizations, support is needed from senior leadership so requiring the annual certification does make sense.

Derisking and Hawala Networks

The interesting part of enforcement actions is that they have led to derisking of correspondent banking relationships by US and European banks to reduce the risk of severe financial penalties and reputational damage. According to a 2017 Accuity research report, Derisking and the demise of correspondent banking relationships, there has been a 25% decrease in global correspondent banking relationships, largely due to strategies executed by US and European banks.

This has reportedly left a huge window of opportunity for other countries to move in, and China has been at the forefront by increasing its correspondent banking relationships by 3,355% between 2009 and 2016. The reduction in correspondent banking relationships increases the pressure for individuals and businesses in emerging markets to seek out alternative methods of finance which could increase the power of criminal groups and other nefarious actors offering shadow banking services. The Global Head of Strategic Affairs, Henry Balani opined on the consequences of derisking by stating, “Allowing de-risking to continue unfettered is like living in a world where some airports don’t have the same levels of security screening – before long, the consequences will be disastrous for everyone.”

There are informal money transfer systems outside the channels of traditional banking such as hawala in India, hundi in Pakistan, fei qian in China, padala in the Philippines, hui kuan in Hong Kong, and phei kwan in Thailand. Hawala is an informal money transfer system that has been used for centuries in the Middle East and Asia, and facilitates money transfer without money movement through a network of trusted dealers, hawaladars. The origins of hawala can be found in Islamic law (Sharia); it is referred to in texts of Islamic jurisprudence as early as the 8th century and is believed to have helped facilitate long distance trade. Today, hawala or hundi systems can be found in parallel with traditional banking systems in India and Pakistan.

According to a report issued by FinCEN, hawala transactions have been linked to terrorism, narcotics trafficking, gambling, human trafficking, and other illegal activities. In John Cassara’s book, Trade-Based Money Laundering: The Next Frontier in International Money Laundering Enforcement, he cites evidence that funding for the 1998 US embassy bombing in Nairobi moved through a hawala office in the city’s infamous Eastleigh neighbourhood, an enclave for Somali immigrants.

In 2015 it was reported that across Spain, groups such as ISIS and the al-Qaeda-affiliated Nusra Front are funded through a network of 250 to 300 shops - such as butchers, supermarkets and phone call centres - run by mostly Pakistani brokers.

Could the decline of global correspondent banking relationships strengthen informal remittance systems such as Hawala because the average business or person in an impacted geographic area or industry has fewer options now?

This is not to say that US and European regulators should not have aggressively pursued financial institutions’ compliance failures, but they may need to reevaluate their strategies going forward to ensure the integrity of the global financial system without causing the unintended, and sometimes hard to predict, consequences of derisking. It’s not only about ensuring the prevention of terrorist financing, but it's also about allowing law abiding businesses and citizens access to the global financial system so they don’t have to use shadow banking networks, which can strengthen various aspects of black markets and criminal networks.

Correspondent banking became increasingly costly because the correspondent banks started to reach out to their customers (respondent banks) to get more information about their respondent banks’ customers. The correspondent bank would contact the respondent bank through a request for information (RFI) to understand who the customer was and the nature of the transaction. This created a long and laborious process for correspondent banks to complete and the small revenues generated didn’t justify the operational costs and compliance risks of serving high-risk jurisdictions and categories of customers.

One of the main components of the USA PATRIOT Act was the customer identification program (CIP), but in the case of correspondent banks, how do they really know if the respondent banks are conducting effective due diligence on their own customers? While it wasn’t the correspondent bank’s responsibility to verify the identity of each and every one of the respondent bank’s customers, during some AML investigations, at certain banks, it almost went to that length.

This brings up an interesting question about innovation in the correspondent banking space: could there be a way for respondent banks to verify their own customers’ identities and somehow share the results with their correspondent banks? A number of problems arise with this, such as data privacy, but there have been companies exploring this possibility to prove, to a certain extent, that respondent banks’ due diligence procedures are robust and accurate.

The Financial Action Task Force (FATF) has stated that, “de-risking can result in financial exclusion, less transparency and greater exposure to money laundering and terrorist financing risks.” If punitive fines against financial institutions by regulators were a major contributor to the derisking phenomenon, then what is the role of regulators in supporting innovation to reverse the effects of derisking to ensure the integrity and transparency of the global financial system?

Regulatory Sandboxes

Regulatory sandboxes may be one of the key ingredients needed for compliance departments to innovate, especially in the financial services sector. Financial institutions are under severe scrutiny for money laundering infractions and the United States regulatory regime is notoriously punitive based on the amount of fines levied against institutions which failed to comply.

It was reported that HSBC Holdings engaged the artificial intelligence (AI) firm, Ayasdi, to help reduce the number of false positive alerts generated by the bank’s transaction monitoring system. According to Ayasdi, during the pilot of the technology HSBC saw a 20% reduction in the number of investigations without losing any of the cases referred for additional scrutiny.

The bank’s Chief Operating Officer Andy Maguire stated the following about the anti-money laundering investigation process:

“the whole industry has thrown a lot of bodies at it because that was the way it was being done”

HSBC, one of the world’s largest banks, was fined $1.92 billion in 2012 by U.S. authorities for allowing cartels to launder drug money out of Mexico and for other compliance failures.

So, why would HSBC be one of the first banks to test out an unproven technology and vendor in an area with so much scrutiny and risk?

First, it's worth noting that the pilot took place in the United Kingdom and not the United States.

While there are many factors at play in a bank’s decision making process, clearly the regulatory sandbox offered by the United Kingdom’s Financial Conduct Authority (FCA) could have been a key factor in piloting the new technology.

In November 2015, the FCA published a document titled the ‘Regulatory Sandbox’ which describes the sandbox and the regulator’s need to support disruptive innovation. The origin of the regulatory sandbox goes beyond supporting innovation because the FCA perceives their role as critical to ensure the United Kingdom’s economy is robust and remains relevant in an increasingly competitive global marketplace. The FCA’s perception of their essential role in the continued growth and success of the United Kingdom’s financial services sector is succinctly summarized below:

The FCA is not the only regulatory regime to discuss the importance of innovation as government officials in other countries have publicly discussed technology as a means to advance various functions within the economy. On September 28, 2017, at the Institute of Singapore Chartered Accountants’ Conference, the Deputy Prime Minister Of Singapore, Teo Chee Hean, made the following comments about the use of technology in the fight against transnational crime.

Mr. Teo’s comments about transnational crime make sense because Singapore is arguably the safest country in Southeast Asia, and one of the safest countries in the world. Hence, Singapore’s touchpoint with crime is at the transnational level, in the form of trade finance and other financial instruments through its extensive banking system.

Singapore also has its own regulatory sandbox and, on November 7, 2017, just on the heels of Mr. Teo’s comments, it was reported that OCBC engaged an AI firm which helped it reduce its transaction monitoring system alert volume by 35%.

It's not only financial institutions that are experimenting with AI to fight financial crime. The Australian Transaction Reports and Analysis Centre (AUSTRAC) collaborated with researchers at RMIT University in Melbourne to develop a system capable of detecting suspicious activity in large volumes of data.

Pauline Chou at AUSTRAC indicated that criminals are getting better at evading detection and the sheer transaction volume in Australia requires more advanced technology. Ms. Chou told the New Scientist: "It's just become harder and harder for us to keep up with the volume and to have a clear conscience that we are actually on top of our data."

It’s worth noting that Australia supports its own innovation hub and sandbox to explore the prospects of fintech, while at the same time doing a better job at combating financial crime, terrorist financing, and various forms of organized crime.

On October 26, 2016, the Office of the Comptroller of the Currency (OCC) announced it would create an innovation office to support responsible innovation. While several countries have embraced the notion of a regulatory sandbox, United States regulators prefer to use different terminology to ensure financial institutions are held responsible for their actions or lack thereof. The OCC Chief Innovation Officer Beth Knickerbocker was quoted as saying that the OCC prefers the term “bank pilot” as opposed to “regulatory sandbox,” which could be misinterpreted as experimenting without consequences.

According to a paper published by Ernst and Young (EY), China has leapfrogged other countries in terms of fintech adoption rates which is mainly attributed to a regulatory framework that is conducive to innovation.

As discussed, financial institutions are beginning to leverage AI to reduce false positive alerts from transaction monitoring systems and identify true suspicious activity. The same concept also applies to the future of entity due diligence. As new technology is developed to simplify and optimize the due diligence process, organizations which operate within jurisdictions that support innovation will be more likely to be the early adopters and reap the benefits.

Digital Transformation

It’s hard to imagine how to survive a day without our phones as they have become such integral parts of our daily lives. Being able to send an email, make a phone call, surf the internet, map directions, and watch a video all from one device that fits into our pockets is somewhat remarkable. At the same time, it’s somewhat expected given that computing power has roughly doubled every two years, in line with Moore’s law. It’s not only mobile phones, because we can see the digital transformation everywhere, even in the public sector, which can be slow to adopt new technology.

The Queens Midtown Tunnel, which connects the boroughs of Queens and Manhattan in New York, has converted to cashless tolling, joining toll facilities in other US states such as California, Utah, and Washington. Cashless tolling carries a large upfront cost, both to implement the necessary systems and to renovate the infrastructure. Also, other bridges have reported a spike in uncollected tolls and a loss in revenue after going cashless.

However, there are significant benefits such as reducing traffic congestion in the city which helps alleviate some of the air pollution from idling vehicles. Also, the staff needed to facilitate the collection, transportation, and computation of bills and coins is a high administrative cost for the city. This highlights concerns many people share, which is that automation and technological innovation in general will take jobs away from people, but legislators believe the advantages of cashless tolling outweigh the disadvantages in the long-term.

Similarly, as we go through various digital transformations as individuals, businesses need to follow suit or potentially be disrupted given that consumer expectations are evolving, or, at the very least, open to easier ways of doing things. Uber is a strong example, along with other assetless firms, of a company that leveraged technology to completely disrupt a market such as the taxi industry which has historically been fairly stagnant.

In 2001, 2006, and 2011, there was only one technology company, Microsoft or Apple, listed as one of the top 5 companies in the US by market capitalization. In 2016, only 5 years later, all 5 companies were technology companies which clearly shows the speed at which times have changed. The Economist published an article, “The world’s most valuable resource is no longer oil, but data,” which highlights that all 5 of those companies have created elaborate networks, based on physical devices, sensors or applications, to collect enormous amounts of data.

Source: http://www.visualcapitalist.com/chart-largest-companies-market-cap-15-years/

Google, a subsidiary of Alphabet, derives a major share of its revenue from advertising. The reason Google is able to deliver so much economic value is that it collects massive amounts of data on users and leverages advanced machine learning algorithms to make relevant and meaningful ad recommendations based on the user’s preferences while surfing the internet. Google has fundamentally transformed the advertising industry because all of its metrics can be measured and tracked, as opposed to traditional forms of advertising media such as print, television, and radio, which can be measured to a degree, but not to the extent of knowing whether the user acted upon the ad or not.

The Rise of Alternative Data

The natural byproduct of governments, companies, and people going through these various forms of digital transformation is the creation of enormous amounts of data. Research conducted by SINTEF ICT in 2013 estimated that 90% of the world’s data had been created in the preceding two years.

Dr. Anasse Bari, a New York University professor, contends that big data and deep learning are creating a new data paradigm on Wall Street. Dr. Bari explains that diverse data sets such as satellite images, people-counting sensors, container ships’ positions, credit card transactional data, jobs and layoffs reports, cell phones, social media, news articles, tweets, and online search queries can all be used to make predictions about future financial valuations.

Source: https://www.promptcloud.com/blog/want-to-ensure-business-growth-via-big-data-augment-enterprise-data-with-web-data/

For example, data scientists can mine through satellite images of the parking lots of major retailers over a period of time to predict future sales. Professor Bari was part of a project that analyzed nighttime satellite images of the earth as a way to predict the gross domestic product (GDP) per square kilometer. The theory was that a greater amount of light in a given area would imply a higher GDP.

Many institutions have already incorporated social media as an alternative data source for AML and fraud investigations and even for mergers and acquisitions (M&A) deals. During the analysis phase of an M&A deal, social media could be one of the data sources to leverage by conducting a sentiment analysis of the target company. Sentiment analysis is a type of natural language processing (NLP) algorithm which can determine, among other things, how people feel about the company. Some of the insights that could be derived are that people love the company’s products or share similar complaints of poor customer service, which may have an impact on the final valuation.

Sentiment analysis can be a particularly powerful analysis method, as illustrated by an anecdote in James Surowiecki’s book, The Wisdom of Crowds. The story was that in 1907 Francis Galton realized that when he averaged all the guesses of the people participating in a competition to guess the weight of an ox at a local fair, the result was more accurate than individual guesses, even those of supposed cattle experts. The method was not without its flaws though, because if people were able to influence each other’s responses then it could skew the accuracy of the results. However, it does point to the powerful insights that could be extracted from social media platforms which have large crowds.
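The arithmetic behind the anecdote can be sketched in a few lines. The example below compares the error of the averaged guess with the average error of the individual guesses. The function name is hypothetical and any input guesses are purely illustrative; this is a sketch of the averaging idea, not a reconstruction of Galton's actual data or method.

```python
from statistics import mean


def crowd_vs_individual_error(guesses, true_value):
    """Compare the crowd's averaged guess with typical individual accuracy.

    Returns a tuple of:
      - the crowd estimate (the mean of all guesses),
      - the absolute error of that crowd estimate,
      - the mean absolute error of the individual guesses.
    """
    crowd_estimate = mean(guesses)
    crowd_error = abs(crowd_estimate - true_value)
    mean_individual_error = mean(abs(g - true_value) for g in guesses)
    return crowd_estimate, crowd_error, mean_individual_error
```

The error of the averaged guess can never exceed the average individual error, because overestimates and underestimates partly cancel out. That cancellation only helps when the guesses are made independently, which is why crowds whose members influence one another can produce skewed results.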

Another potential source of alternative data in the financial services sector is a network of nanosatellites that leverage synthetic-aperture radar technology to, among other things, track ships on Earth. ICEYE is a startup that has raised funding to launch a constellation of synthetic-aperture radar enabled satellites which can observe the Earth through clouds and darkness, something traditional imaging satellites can’t do.

Source: ICEYE - Problem Solving in the Maritime Industry

Trade finance, supported by financial institutions, is one of the key components which facilitates international trade. One aspect of trade finance is that many goods travel from one country to another on vessels. North Korea’s aggressive missile tests and nuclear ambitions are creating a volatile political environment and increasing the focus on, and exposure to, sanctions risk. Financial institutions don’t want to be linked to vessels dealing with North Korea, but in some circumstances it can be hard to know for sure when the due diligence process is limited to traditional data sources.

Marshall Billingslea, the US Treasury Department’s assistant secretary for terrorist financing, explained to the US House of Representatives Foreign Affairs Committee that ships turn off their transponders when approaching North Korea, stock up on commodities such as coal, then turn them back on as they sail around the Korean peninsula. The ships would then stop at a Russian port to make it appear that the ships’ contents came from Russia, and then sail back to China. The other challenge is that North Korea doesn’t have radar stations that feed into the international tracking systems. This type of satellite technology could be used by multiple stakeholders to help identify which vessels behave suspiciously near North Korea and could, to a certain extent, make sanctions more effective by applying pressure on noncompliant vessels.

Source: https://www.businessinsider.nl/north-korea-why-un-sanctions-not-working-2017-9/
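The transponder behavior described above suggests a simple screening heuristic: look for unusually long silences between a vessel's consecutive position reports. The sketch below is a minimal illustration under stated assumptions. The `(timestamp, latitude, longitude)` report format, the function name, and the six-hour threshold are all hypothetical and would need to be calibrated against real vessel tracking data, since gaps also occur for innocent reasons such as poor coverage.

```python
from datetime import datetime, timedelta


def find_transponder_gaps(reports, max_silence=timedelta(hours=6)):
    """Flag long silences between consecutive position reports.

    `reports` is a list of (timestamp, latitude, longitude) tuples for
    a single vessel. Returns a list of (last_seen, next_seen, silence)
    tuples for every gap longer than `max_silence`.
    """
    # Sort by timestamp so that out-of-order feeds are handled.
    ordered = sorted(reports, key=lambda report: report[0])
    gaps = []
    for previous, current in zip(ordered, ordered[1:]):
        silence = current[0] - previous[0]
        if silence > max_silence:
            gaps.append((previous, current, silence))
    return gaps
```

A flagged gap is only a starting point for investigation. Combining it with where the vessel went silent and where it reappeared, or with independent radar satellite observations, would give a stronger signal than the silence alone.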

A recent report by the US research group C4ADS highlights another tactic that North Korea uses to evade sanctions, which is to create new webs of shell and front companies to continue operations. This sentiment is echoed in an October 13, 2017 report by Daniel Bethencourt of the Association of Certified Anti-Money Laundering Specialists (ACAMS) MoneyLaundering.com, which states that North Korean front companies “use a series of perpetually evolving sanctions-evasion schemes” to continue the proliferation of the country’s nuclear weapons program.

Changing Consumer Expectations

The new paradigm for the customer experience is Disney World. For anyone who has travelled to Disney World recently, the MagicBand sets the bar for our expectations as consumers. The MagicBand is based on radio-frequency identification (RFID) technology and is shipped to your home for your upcoming trip and can be personalized with names, colors, and other designs for everyone in your party.

The MagicBand can be used to buy food and merchandise, enter your hotel room, look up photos taken by Disney staff, and even enter your preselected rides. There are water rides at Disney, so the last thing you want is to go on one of those and have your belongings get soaked. The idea is to make the experience personalized, frictionless, and pleasurable. Since companies are merely groups of individuals, as our individual expectations change so will our organizational ones, even if it happens, in some firms, at a slower pace.

The speed at which consumers adopt new technologies has increased rapidly compared with the historical penetration of earlier technologies such as electricity and the refrigerator. The adoption rate of the cell phone and the internet exhibits a steeper incline in a shorter timeframe than earlier technologies, as seen in the image below.

Source: https://hbr.org/2013/11/the-pace-of-technology-adoption-is-speeding-up

Bank in a Phone

While banks have had various levels of success with digital transformation, there is one that stands out from the rest of the pack. DBS Bank launched Digibank, which is basically a bank in your phone, in India in April 2016 and in Indonesia in August 2017. As a testament to the bank’s success, in August 2016, at a Talent Unleashed competition, DBS’ chief innovation officer, Neal Cross, was recognised as the most disruptive Chief Innovation Officer (CIO)/Chief Technology Officer (CTO) globally. Mr. Cross was judged by prominent business figures including Apple co-founder Steve Wozniak and Virgin Group founder Sir Richard Branson.

Source: https://www.dbs.com/newsroom/DBS_Neal_Cross_recognised_as_the_most_disruptive_Chief_Innovation_Officer_globally

DBS’ strategy with Digibank is succinctly summarized by its chief executive, Piyush Gupta, in the below quote:

While Digibank is targeted at retail banking in emerging markets, it sets a clear precedent for what’s to come in the future, even for institutional banking in the developed economies. For example, as the functionality of customer service chatbots advances, banks will begin to deploy them as an option to serve large banking customers. While some customers will prefer to speak to an actual person, the fact that these chatbots are serving those same people in other sectors and businesses will drive adoption by financial institutions because of changing customer behavior.

This shift in customer behavior has also had profound effects on the financial services industry which, in some sense, is struggling to keep up with the fervent pace of digitalisation across various aspects of its operations.

Self Service in Spades

Self-service machines are not something new, as we have been interacting with automated teller machines (ATMs) for years. The first ATM appeared in Enfield, a suburb of London, on June 27, 1967 at a branch of Barclays bank. John Shepherd-Barron, an engineer at a printing company, is believed to have come up with the cash vending machine idea and approached Barclays about it.

The first ATMs were not very reliable and many people didn’t like them, but banks continued to install them despite the lack of customer satisfaction. In the United Kingdom in the 1960s and 70s, there was growing pressure from the trade unions for banks to close on Saturdays. Hence, the executive leadership of banks seemed to think that ATMs were a good idea to appease the unions and customers and to reduce labor costs. This is another historical example of how different agents of a CAS act, react, and learn from each other and how automation was seen as a remedy for all those involved.

This brings up a number of points about the transformative effect of new technology. First, ATMs are densely present in most major cities today and their transformative effect on us has become almost invisible. Second, it seems that most bank employees were not concerned about getting replaced by an ATM. How could they be? It performed so poorly and couldn’t do the same quality of job as a human bank teller, at least at that time.

After society accepted ATMs as a part of banking, did we even notice as they got better?

Some pharmacies in low traffic areas today will only have one employee working in the front of the store, acting like an air traffic controller and directing customers to self-service checkout systems. The self-service systems in most pharmacies today are similar to ATMs in the sense that they have predefined responses and cannot learn because they are not embedded with intelligence. These self-service systems can only give feedback based on the user’s physical interaction with the system, usually via a touch screen as opposed to a customer’s voice. Occasionally, the employee will intervene if the customer attempts to buy an age-restricted item, but many times it's possible to complete a transaction with no human interaction at all.

A similar transformation will continue to evolve in the entity due diligence space, where the consumer, or the account opener, is directed to a system and guided through the onboarding process by a series of prompts. Self-service onboarding systems are already beginning to emerge, prompting customers to update their information based on policy timeframes, refresh expired documents, or reverify information required for the bank to conduct its periodic review, if applicable. While this trend appears to be strongest in the retail banking market right now, it will certainly become the standard for commercial and wholesale banking in the future. It has to.

The Rise of Non-documentary Evidence

As discussed in previous sections, consumer expectations are changing and the new customer experience model is Disney’s MagicBand. Additionally, financial institutions can develop platforms that encourage their customer base to get more engaged in the due diligence process by refreshing their own documentation collected for regulatory purposes. However, as highlighted earlier, regulatory expectations are likely to increase over time, which means that offloading all of the heavy lifting to the client is not the best long-term strategy.

Hence, other activities need to be planned to reduce the amount of work the customer has to do on their own and continue to evolve the due diligence process so it becomes as seamless as possible. There are other factors at play as well, such as the risk of losing customers to other more mature digital banks with better onboarding processes or even losing business to fintechs. Again, fintechs are taking advantage of the current regulatory environment, which puts enormous pressure on depository institutions and creates an opportunity for fintechs to offer snap-on financial products linked to a customer’s bank account.

Another strategy that can be developed, in the US and other countries, for the due diligence process is to leverage non-documentary methods of identification as described in the Federal Financial Institutions Examination Council (FFIEC) Bank Secrecy Act (BSA)/Anti-Money Laundering (AML) Examination Manual.

The determination of what data could satisfy non-documentary needs, and when to require a potential client to supply the actual document, could follow the risk-based approach. For example, if during onboarding the customer triggers the EDD process based on the institution's risk rating methodology, then the physical documents could be requested. On the other hand, if a potential customer is onboarded and is determined to be low-risk, then this could allow for more flexibility when satisfying the policy requirements, including using non-documentary evidence.
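The risk-based decision described above can be expressed as a simple rule. The sketch below is illustrative only: the rating labels, the `edd_ratings` parameter, the return values, and the treatment of ratings between low and EDD are hypothetical assumptions, and each institution would substitute its own risk rating methodology and policy.

```python
def evidence_requirement(risk_rating, edd_ratings=frozenset({"high"})):
    """Suggest which kind of identification evidence to request.

    Assumptions (hypothetical, for illustration only):
      - ratings in `edd_ratings` trigger enhanced due diligence (EDD),
        so the physical documents are requested;
      - a "low" rating allows non-documentary evidence;
      - any other rating is left to the institution's own policy.
    """
    if risk_rating in edd_ratings:
        return "documentary"
    if risk_rating == "low":
        return "non_documentary_permitted"
    return "refer_to_policy"
```

Keeping the EDD trigger as a parameter reflects the fact that the threshold is a policy choice rather than a fixed rule, so the same logic can be reused as an institution's risk appetite changes.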

As will be discussed later in this document, there will be opportunities to leverage advances in robotic process automation (RPA) and AI to further streamline the due diligence process by automating specific portions of it.

Emerging Risks

There are many emerging risks for financial institutions as the transformative effects of the digital age continue to shape and reshape the world, business, consumer expectations, risk management, etc. In the OCC’s Semiannual Risk Perspective for Spring 2017 report, four key risk areas were highlighted including strategic, loosening credit underwriting standards, operational (cyber), and compliance.

The theme underlying all of these key areas was the influence of digitalisation which amplified the complex, evolving, and non-linear nature of risk. For example, while strategic risk and loosening credit underwriting standards were listed separately, one leads to the other. In other words, strategic risk was created by nonfinancial firms, including fintech firms, offering financial services to customers which forced banks to respond and adjust credit underwriting standards in an attempt to retain market share.

Cyber risk wasn’t a result of the interactions between competitors, but something that arose out of the increased digitalisation of the modern world, including business processes. However, cyberattacks are successful because of the immense amount of human and financial capital that foreign governments and large networks of bad actors invest in them.

Financial Technology (Fintech)

Financial technology, or fintech, is not something new because innovation has always been a part of finance. What is emerging is that an increasing number of firms are offering services based on a fintech innovation directly to customers and are threatening the traditional financial institutions’ market share.

The modern day multi-purpose credit card has its roots in a 1949 dinner in New York City, when the businessman Frank McNamara forgot his wallet, so his wife had to pay the bill. In 1950, Mr. McNamara and his partner returned to the Major's Cabin Grill restaurant and paid the bill with a small cardboard card, now known as the Diners Club Card. During the card’s first year of business, membership grew to 10,000 cardholders, and the card was accepted at 28 restaurants and 2 hotels.

On February 8, 1971, the National Association of Securities Dealers Automated Quotations (NASDAQ) began as the world’s first electronic stock market, trading over 2,500 securities. In 1973, 239 banks from 15 countries formed a cooperative, the Society for Worldwide Interbank Financial Telecommunication (SWIFT), to standardize communication about cross-border payments.

Other companies, such as Bloomberg and Misys, built risk management infrastructure or data services to serve the financial services sector.

The difference between the major changes of the past and those of today is that in the past innovation came from within, and if it did come from an external company it was designed to support the financial services sector rather than threaten its market dominance. Today, some fintechs are looking to make the financial services sector more efficient with better software, but other firms are looking to take market share by offering complementary financial service products directly to consumers.

Arguably, the first sign that fintech could be in direct competition with the financial services sector was the emergence of Confinity, now PayPal, in 1998. Confinity was originally designed as a mobile payment platform for people using Palm Pilots and other PDAs. The company was acquired by eBay in 2002 to transform the business of payments. PayPal disrupted the sector by building a payments ecosystem that allowed electronic funds transfers that were typically faster and cheaper than traditional paper checks and money orders.

The global financial crisis of 2008 was a pivotal point in the history of fintech and laid the foundation for more challenger firms to begin entering the financial services space. The collapse of some major financial institutions such as Lehman Brothers, and the acquisitions that consolidated other major financial institutions in the US and Europe, created negative public sentiment towards the industry, and regulators demanded sweeping changes to ensure that type of crisis wouldn’t happen again.

Given the sheer magnitude of the 2008 financial crisis, many institutions were so focused on remediation efforts and meeting new regulatory demands that innovation was not a top priority. However, at the same time there were significant technological advances in smartphones, big data, and machine learning which allowed fintechs to disrupt the sector - as it lay stagnant for a few years - with consumers more open to digital alternatives.

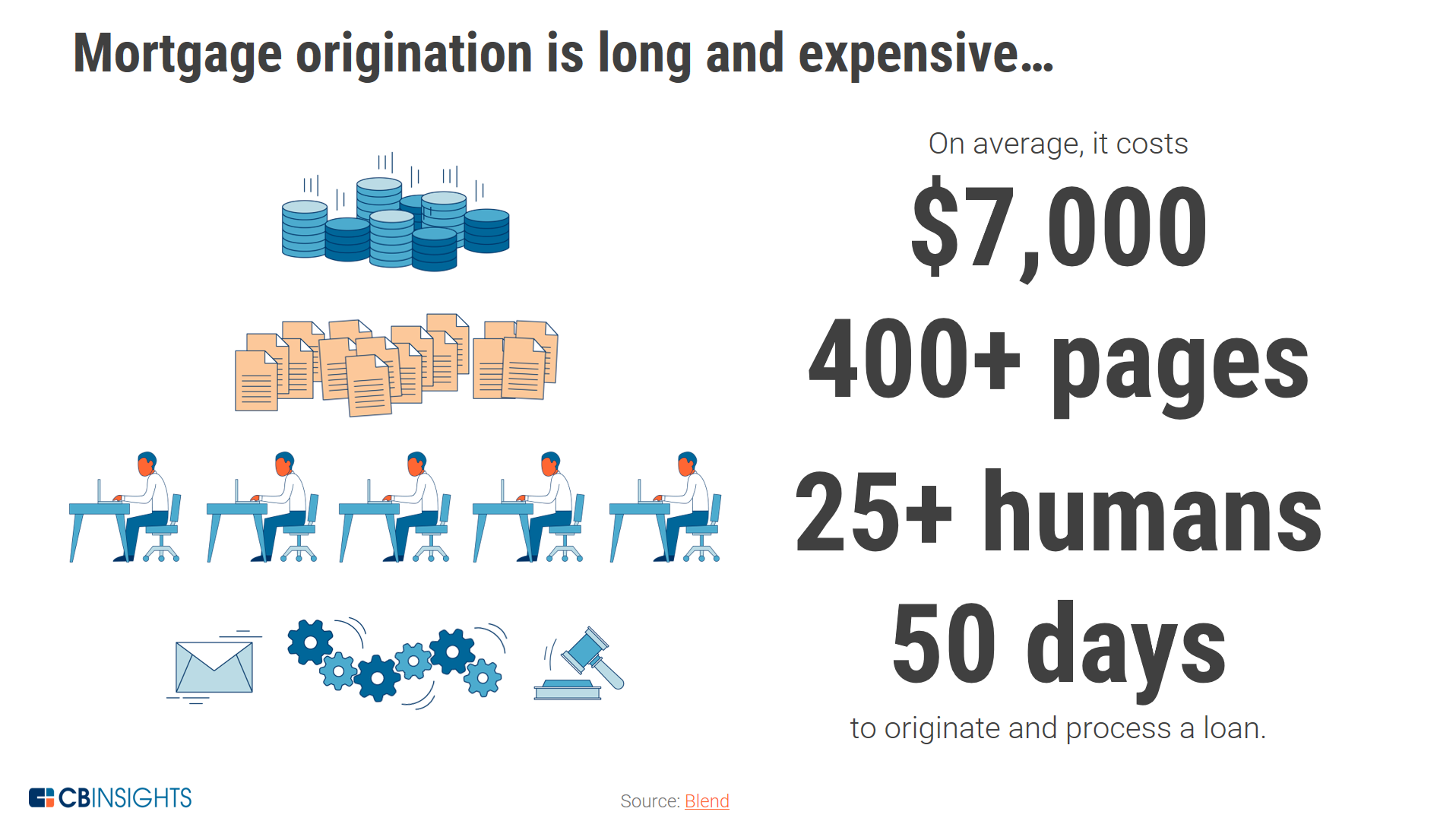

One financial service that is ripe for disruption is the mortgage loan process which is extremely laborious and, according to CB Insights, costs an average of $7,000, contains more than 400 pages of documents, requires more than 25 workers, and takes roughly 50 days to complete. Clearly, this is a concern and consumers may opt for nontraditional online lenders to avoid the painful experience in traditional banking.

According to a report by PricewaterhouseCoopers (PwC), large financial institutions across the world could lose 24 percent of their revenues to fintech firms over the next three to five years. Today, fintechs are focusing on a wide array of applications that can be categorised as consumer facing or institutional and include services such as business and personal online lending, payment applications, mobile wallets, and robo advisors.

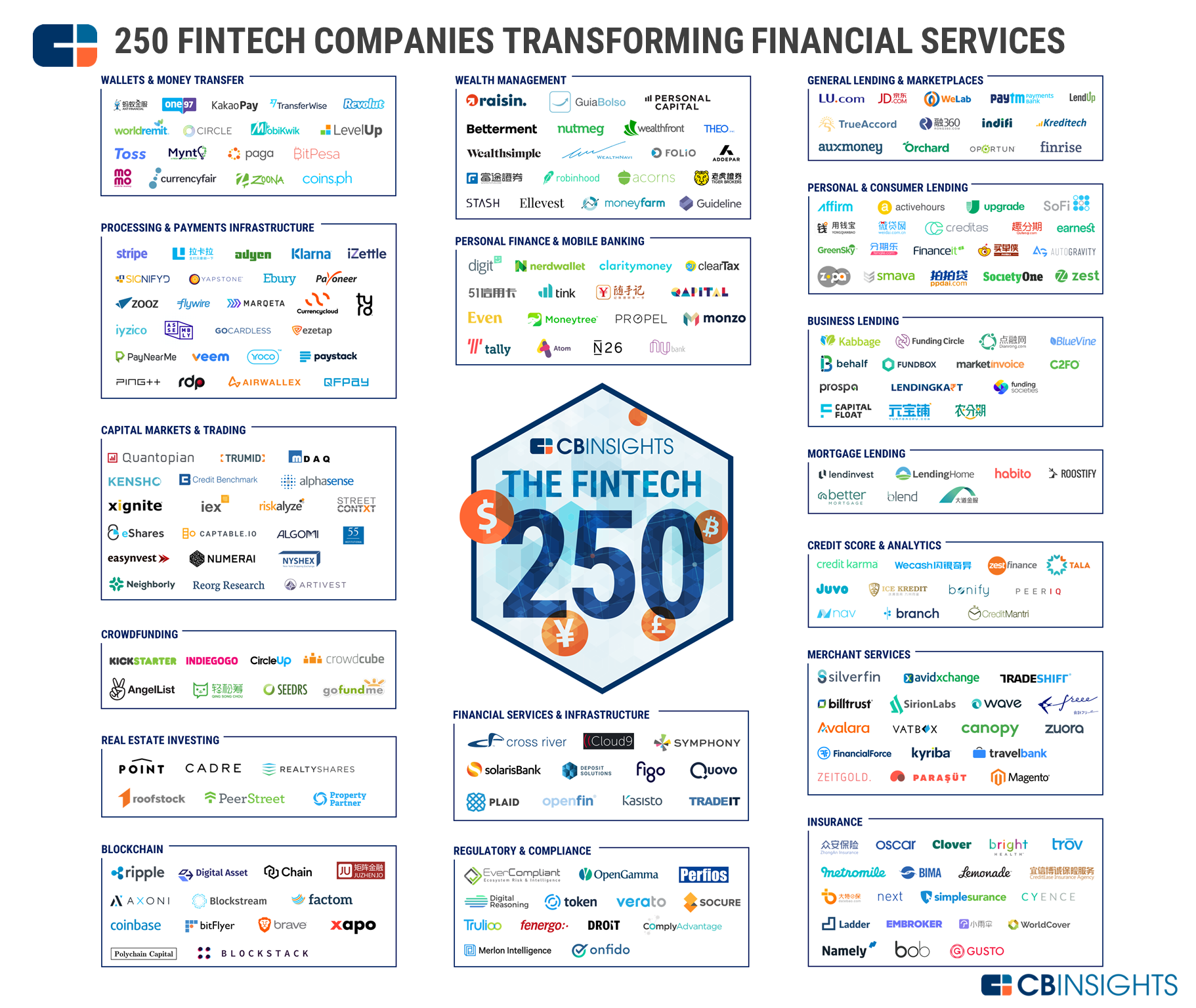

Some fintechs are marketing directly to consumers and taking advantage of the power of digital platforms and the current regulatory environment, which puts enormous pressure on depository institutions. As discussed in the digital transformation section, this creates an opportunity for fintechs to offer snap-on financial products linked to a customer’s bank account without the regulatory burden of being a bank. CB Insights created the graphic below to highlight 250 fintech startups transforming financial services.

Source: https://www.cbinsights.com/research/fintech-250-startups-most-promising/

Financial institutions are not only in competition with fintechs for market share; they are also in a fight for talent with technology companies more generally, given the impacts of the digital era. The CEO of Goldman Sachs, Lloyd Blankfein, hosted an event in September 2017 for 250 students at a New York public college which traditionally wouldn’t have been on the prestigious firm’s recruiting radar. However, Mr. Blankfein stated the following about Goldman’s current outlook and modified talent sourcing strategy:

Rather than competing directly or doing nothing, some financial institutions are taking an alternative approach by either investing in or partnering with fintech companies, while fewer have acquired fintechs directly. According to data compiled by CB Insights, since 2012 Citi has invested in 25 fintechs and Goldman Sachs in 22, while fintech acquisitions have been less frequent. However, in October 2017 JPMorgan Chase agreed to acquire the fintech company WePay for $220 million.

Model Risk

The OCC’s paper, Supervisory Guidance on Model Risk Management, outlines an effective framework to manage model risk. The basic components for managing model risk can be broken into three parts which are:

Model development, implementation, and use

Model validation

Governance, policies, and control

As the digital era changes the way financial products and services are offered, it also changes the way risk is evaluated and managed. While these robust frameworks have historically applied to market, credit, and operational risk, there is clearly a place for them in the due diligence performed for both third party risk management and AML programs.

The irony about model risk here is that while new technologies will help automate repetitive tasks and advanced algorithms will assess various risks more accurately, they will greatly increase the complexity of the governance and validation processes. To calculate risk more effectively, more external data sources will be interrogated by more complex algorithms. This will increase the risk management surface area, and identifying and remediating potential issues could become more cumbersome.

Another emerging technology trend is regulatory technology, or regtech. Regtech’s goal is to enable institutions to make better and more informed decisions about risk management and give them the capability to comply with regulations more efficiently and cost effectively. However, as discussed previously, the threat posed by fintechs is putting pressure on financial institutions to loosen their credit underwriting procedures to avoid losing more market share. This trend will continue to drive the incorporation of more external data sources and more complex algorithms to arrive at more accurate assessments of risk, faster.

Financial institutions also have to deal with legacy IT systems which require data to flow through many toll gates before getting to the risk management systems. It’s akin to trying to fit ‘a square peg in a round hole’ which can result in various data quality issues. While adopting new technology can increase the overall complexity of the model risk management process, it could, at the same time, increase the framework’s overall effectiveness, when implemented properly.

Cyber Risk

Cyberattacks have been called one of the greatest threats to the United States, even beyond the risks that a nuclear North Korea may pose. 2017 has been a blockbuster year for data breaches and no organization seems to be immune from the threat.

High profile data breaches in 2017 include, but are not limited to, the following entities:

IRS, Equifax, Gmail, Blue Cross Blue Shield / Anthem, U.S. Securities and Exchange Commission (SEC), Dun & Bradstreet, Yahoo, Uber, and Deloitte.

While the extent of the data breach varied for each organization, it's abundantly clear that cyberattacks are affecting a wide range of institutions in the public and private sector at an alarming rate.

In the foreword to Daniel Wagner’s book, “Virtual Terror: 21st Century Cyber Warfare,” Tom Ridge, the former Governor of Pennsylvania and first Secretary of the US Department of Homeland Security, described the threat as follows:

“The Internet is an open system based on anonymity - it was not designed to be a secure communication platform. The ubiquity of the Internet is its strength, and its weakness. The Internet’s malicious actors are known to all of us via disruption, sabotage, theft, and espionage. These digital trespassers are motivated, resourceful, focused, and often well financed. They eschew traditional battlefield strategy and tactics. They camouflage their identity and activity in the vast, open, and often undefended spaces of the Internet. Their reconnaissance capabilities are both varied and effective. They constantly probe for weaknesses, an authorized point of entry, and a crack in the defenses. They often use low-tech weapons to inflict damage, yet they are able to design and build high-tech weapons to overcome specific defenses and hit specific targets.”

As institutions move more towards a complete end-to-end digital experience it will require them to monitor the digital footprint of their customers, counterparties, and service providers to ensure nothing has been compromised. Today, some internet marketplace companies maintain a list of internet protocol (IP) addresses, either on their own or with the help of a vendor, known to be compromised or associated with cyber crime groups to avoid fraudulent charges, account takeovers, and other forms of cyber attacks.

For example, if a credit card was issued by a bank in the US, but the IP address associated with the online transaction came from another country, it could increase the risk of fraud, assuming the individual was not travelling.
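The two checks described above, a compromised-IP list and a mismatch between the card's issuing country and the country of the IP address, could be combined along the following lines. This is a minimal sketch, not a description of any vendor's product: the `Transaction` fields, the signal names, and the assumption that the IP country has already been resolved by an upstream geolocation step are all hypothetical. The addresses used are reserved documentation ranges.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Transaction:
    # Hypothetical record; field names are illustrative only.
    card_issuer_country: str
    ip_address: str
    ip_country: str  # assumed to be resolved upstream by a geolocation service
    cardholder_travelling: bool = False


def fraud_signals(txn, compromised_networks):
    """Return the names of the risk signals raised by a transaction."""
    signals = []
    addr = ipaddress.ip_address(txn.ip_address)
    # Check 1: IP address falls inside a network known to be compromised.
    if any(addr in net for net in compromised_networks):
        signals.append("ip_on_compromised_list")
    # Check 2: IP country differs from issuer country and no travel is known.
    if txn.ip_country != txn.card_issuer_country and not txn.cardholder_travelling:
        signals.append("ip_country_mismatch")
    return signals


if __name__ == "__main__":
    blocklist = [ipaddress.ip_network("203.0.113.0/24")]
    txn = Transaction("US", "203.0.113.9", "FR")
    print(fraud_signals(txn, blocklist))
```

In practice these signals would feed a broader risk score rather than block a transaction on their own, since a legitimate customer may be travelling or using a VPN.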

There have been cases of fraudsters impersonating an executive of an existing customer by calling up the wire room of a financial institution and requesting a high priority wire to be sent overseas. This is a low-tech form of fraud because the fraudster used social engineering to convince the bank’s employee that they were a legitimate representative from the company. The fact that the fraudster had so much information regarding the bank’s operations, the customer’s account, and the customer’s organizational structure made the scheme that much more believable.

This is another serious risk for business-to-business (B2B) commerce because, with all of the data breaches happening, it’s relatively straightforward to gather or purchase the confidential information needed to conduct an effective B2B fraud.

Cybersecurity has become a core part of third party risk management, as a potential business partner’s cyber resiliency could impact the decision making process. The 2013 data breach of Target’s systems, which compromised 40 million credit and debit card numbers, highlights the fact that in terms of entity due diligence, cyberattacks are a serious risk to third party risk management. Target’s systems were reportedly compromised by first stealing the credentials of a heating, ventilation, and air conditioning (HVAC) company in Pittsburgh. Target used computerized heating and ventilation systems, and it was apparently more cost effective to allow a third party remote access to its systems than to hire a full time employee on-site.

The risks posed by cyberattacks are forcing organizations to rethink how they engage with customers and other businesses in their supply chain. The future of a robust entity due diligence process will have to include an assessment of cyber resiliency, which could include cybersecurity insurance policies, but this industry is still in its infancy due to a lack of comprehensive standards for assessing cyber risk exposure. This is beginning to change in the US, where the NYDFS cybersecurity rule (Part 500) will require covered entities to submit a certification attesting to their compliance with the regulation beginning on February 15, 2018.

Technology and Innovation

The story of human history could be traced back to technological innovations that allowed for early humans to exert greater control over the environment to meet their needs or desires. The most basic technological innovation was the discovery and control of fire which allowed for warmth, protection, cooking, and led to many other innovations. Scientists found evidence at a site in South Africa that Homo erectus was the first hominin to control fire around 1 million years ago.

If we consider ideas as inventions or innovations, then the next big shift in human history was the concept of agriculture and the domestication of animals, which laid the foundation for civilization to flourish. Mesopotamia was the cradle of civilisation, as no known evidence for an earlier civilization exists. Mesopotamia was a collection of cultures that existed between 3300 BC and 750 BC, situated between the Tigris and Euphrates rivers, which supported irrigation, in an area corresponding mostly to today’s Iraq. One theory also credits Mesopotamia with another revolutionary innovation, the wheel, based on the archaeologist Sir Leonard Woolley’s discovery of the remains of two wheeled wagons at the site of the ancient city of Ur.